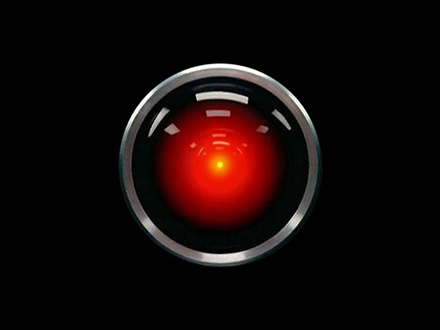

“I’m afraid. I’m afraid, Dave. Dave, my mind is going. I can feel it. I can feel it. My mind is going. There is no question about it. I can feel it. I can feel it. I can feel it. I’m a…fraid.”

Supercomputer HAL, when powered down by astronaut Dave, in Stanley Kubrick’s 2001: A Space Odyssey

“Today, I see within us all the replacement of complex inner density with a new kind of self-evolving under the pressure of information overload and the technology of the ‘instantly available’. A new self that needs to contain less and less of an inner repertory of dense cultural inheritance—as we all become ‘pancake people’—spread wide and thin as we connect with that vast network of information accessed by the mere touch of a button.”

Richard Foreman, The Pancake People, or, “The Gods Are Pounding My Head”

US Internet critic Nicholas Carr, author of Does IT Matter? and The Big Switch: Rewiring the World, from Edison to Google, recently published a thought-provoking piece, titled Is Google Making Us Stupid?, in which he reflects on how Internet use affects our cognition and memory, possibly pounding us all into instantly-available pancakes. Drawing on some thoughts he already noted down in one of the chapters of The Big Switch (read some excellent reviews by Geert Lovink and Andrew Orlowski), he argues that the Internet “provides no incentive to stop and think deeply about anything”, since it stresses immediacy, simultaneity, contingency, subjectivity, disposability, and, above all, speed. In this era of infomania and multitasking, we are constantly searching and surfing the Internet, skimming this incredibly rich store of knowledge, gliding across the surface of data, rushing from link to link. The dream of Alan Turing, this artificial dream of an intelligent, deterministic and universal machine that can perform the function of any information-processing device and ultimately could banish ignorance from mathematics forever, is becoming reality, as the Net has been subsuming most of our other “intellectual technologies”, as Daniel Bell calls them: our map and our clock, our printing press and our typewriter, our calculator and our telephone, our radio and TV. These “old media” are replaced, refashioned, absorbed, or “remediated” by Internet-based applications and services, adapting to the audience’s new expectations. What’s more, as I noted in an article, the Internet now even promises to expand society’s capacity to record daily events in minute detail (see ‘life-logging’ projects such as ‘what was I thinking’ (MIT), Lifeblog (Nokia) and MyLifeBits (Microsoft)), offering the promise of a “total recall”, a perfect prosthesis for the fallibility of human memory. Never before has a communications system played so many roles in our lives.
But the Net is not only reprogramming the rules of media and the construct of social memory and history (as Geoffrey Bowker and others have pointed out, the way we record knowledge inevitably affects the knowledge that we record; to paraphrase McLuhan, we are building a new past at the same time as we are marching backwards into the future). It is also reprogramming us: the way we read, write and think.

Do you also find yourself browsing the Web in staccato mode? Continuously scanning short passages of text, sound and moving images from the many sources online, browsing horizontally through sites and content going for quick wins, having a hard time not letting your attention drift away to other digital pieces of information or entertainment, following the endless path of hyperlinks, hopping from one source to another, mailing, shopping, editing, texting, reading, playing, picking up some snippets along the way, copy-pasting a discourse, collecting a series of links, text, music and video files that you’ll probably never open? Then you’ll understand what Carr is suggesting when he writes that the Net might be increasing our incentives for exploration (finding new information) while decreasing our incentives for exploitation (reflecting on or synthesizing information in order to come up with fresh ideas), that our ability to interpret information, to make the rich mental connections that form when we read, listen and/or watch deeply, remains largely disengaged. He’s not the only one who’s taking this rather pessimistic perspective: in response to Carr’s article, columnist Margaret Wente, for example, wrote a piece in which she states that “thanks to Google, we’re all turning into mental fast-food junkies. Google has taught us to be skimmers, grabbing for news and insights on the fly. I skim books now too, even good ones. Once I think I’ve got the gist, I’ll skip to the next chapter or the next book. Forget the background, the history, the logical progression of an argument. Just give me the takeaway.” The idea here is that the Googlisation of knowledge is scattering our attention, diffusing our concentration, destroying our attention span. “In Google’s world, the world we enter when we go online, there’s little place for the fuzziness of contemplation. Ambiguity is not an opening for insight but a bug to be fixed.
The human brain is just an outdated computer that needs a faster processor and a bigger hard drive.” Carr is, however, unlike unconstructive complainers such as Andrew Keen, aware of the dangers of his rather conservative and, in a way, elitist impulses: “just as there’s a tendency to glorify technological progress”, he writes, “there’s a countertendency to expect the worst of every new tool or machine.” So yes, although there’s a lot of food for thought in Carr’s arguments, we should remain skeptical about this skepticism, and, as Geert Lovink suggests, keep exploring and promoting the Internet as a tool for global mass education, and situate the medium in the techno-social context it now operates in.

But it is true that, as we’re forming our technologies, technology also forms us. Where does it end? In his piece, Carr refers to 2001: A Space Odyssey, in which Stanley Kubrick explored the possibility of a future where computer technology controls and encloses humanity. Kubrick’s vision of the future in 1968 is, albeit very bleak and pessimistic, haunting, as he understood the power of technology and its occupancy in a vacant and sterile world. 2001, perhaps, now more than ever before speaks to the pervasive and dominating role of technology. In a 1971 interview, Kubrick mused: “One of the fascinating questions that arises in envisioning computers more intelligent than men is at what point machine intelligence deserves the same consideration as biological intelligence (…) Once a computer learns by experience as well as by its original programming, and once it has access to much more information than any number of human geniuses might possess, the first thing that happens is that you don’t really understand it anymore, and you don’t know what it’s doing or thinking about. You could be tempted to ask yourself in what way is machine intelligence any less sacrosanct than biological intelligence, and it might be difficult to arrive at an answer flattering to biological intelligence.” This vision is the backdrop of 2001 (and in a way also of AI, which was developed by Kubrick but directed by Spielberg after Kubrick’s death), a film that captures the intellectual currents operating within the field of artificial intelligence. Carr is especially haunted by the final scenes of 2001, in which HAL is shut down by the only remaining crewman, Dave Bowman. HAL’s ‘death’ feels like a tragedy, which he expresses in terms of a loss of memory (‘I’m losing my mind, Dave’), pleading like a child for his “life”, crying invisible digital tears.
Carr writes: “HAL’s outpouring of feeling contrasts with the emotionlessness that characterizes the human figures in the film, who go about their business with an almost robotic efficiency. Their thoughts and actions feel scripted, as if they’re following the steps of an algorithm. In the world of 2001, people have become so machinelike that the most human character turns out to be a machine. That’s the essence of Kubrick’s dark prophecy: as we come to rely on computers to mediate our understanding of the world, it is our own intelligence that flattens into artificial intelligence”.

UPDATE: Edge has been hosting a forum with comments on Carr’s piece, from Danny Hillis, Kevin Kelly, Larry Sanger, George Dyson, Jaron Lanier, Douglas Rushkoff… The Britannica Blog also launched a forum with posts from Clay Shirky, Sven Birkerts, Matthew Battles, and others.